Last Update:

A Programmer’s Guide to Performance Analysis & Tuning on Modern CPUs

Table of Contents

They say “performance is king’… It was true a decade ago and it certainly is now. With more and more data the world generates each day, we need more and more computing power to process it.

It used to be the case that some SW vendors preferred to wait for a new generation of HW to speed up their application and did not spend human resources on making improvements in their code. When it’s no longer the case that each HW generation provides a significant performance boost, we must start paying more attention to how fast our code actually runs.

This article is a guest post from Denis Bakhvalov:

Denis is a compiler engineer at Intel. He is the author of the book “Performance Analysis and Tuning on Modern CPUs”. Denis writes his blog easyperf.net and speaks about performance at developer conferences.

What is performance analysis?

I see lots of people rely on their intuition when they try to optimize their application. And usually, it ends up with random fixes here and there without making any real impact on the performance of the application. I believe that finding the right place to fix should be a result of careful performance analysis, not intuition. But even then, it’s only half of the job. The second half is to actually fix it properly.

Often changing one line in the program source code can yield 2x performance boost. Performance analysis is all about how to find and fix this line! Missing such opportunities can be a big waste.

Why do we need performance analysis?

Modern CPUs are getting more and more cores each year. As of the end of 2019, you can buy the top bin server processor which will have more than 100 logical cores. This is very impressive, but that doesn’t mean we don’t have to care about performance anymore. Very often, application performance might not get better if you assign more cores to it. Understanding why that happens and possible ways to fix it is critical for future scaling of the product. Not being able to do proper performance analysis and tuning leaves lots of performance on the table.

It’s so tempting to ask: “Why HW does not solve all our problems? Why compilers do not solve all our problems?” The short answer is: they do certainly help, but they can’t solve all of the problems. Modern CPUs execute instructions at incredible speed, but still can’t do much if the instructions that are used to perform the job are not optimal or even redundant. Compilers are usually full of heuristics that work well in general but do not cover all the corner cases; it’s simply impossible.

Given that, we as maintainers of our code have no excuse for blaming compilers or HW and not doing performance-related work ourselves. I’m sure that the value of careful performance analysis and tuning will only increase over the upcoming years.

Who needs performance analysis?

Modern CPU is a very complicated thing. But relax, there is no single person in the world who understands all the aspects of how modern multicore CPU works. Unfortunately, that means that the topic of performance analysis is quite complicated with all sorts of unfamiliar metrics and terminology. That’s why I always strive to keep the things simple in my blog. I believe that there is a simple bridge to the world of performance analysis.

“Okay, okay, I buy it, but the topic seems too big, where should I start?” My blog (easyperf.net) covers lots of performance-related topics very extensively, but for someone just starting, this post will be a good overview.

Later in the article, I will touch on the following 4 pillars of the subject:

1. How to configure machine and measure performance properly?

2. What features for performance analysis does HW provide and how SW tools interact with them?

3. Essential methodologies in performance analysis.

4. How to address typical performance problems.

Take it as a roadmap if you will.

Conducting fair performance measurements

There are many different features in HW and SW that are intended to increase performance automagically. But some of them have non-deterministic behaviour. Take turbo boost feature, for example: if we start two runs, one right after another on a “cold” processor, first run will possibly work for some time in overclocked mode (read: work faster), but the second run will operate on its base frequency without entering the turbo mode. That’s where variation in results might come from.

Since we have little control over such features, it makes sense to disable them for the time of the experiment to receive more consistent results. Ideally, in such cases, we want all the potential sources of performance non-determinism to be disabled in a system. This article is an attempt to bring all the tips together, provide examples and give instructions on how to configure your machine correctly.

Profiling applications

Probably, the oldest method for doing performance analysis is the code instrumentation. We all did it many times. Remember when you insert some printf statement at the beginning of the function just to count the number of times the function was called? Ha, me too. This is the easiest and likely the most precise and verbose technique to analyze the performance of the application. Yet code instrumentation has serious disadvantages. In particular, large overhead and the need to recompile the app every time we want to count something different. People do not use manual code instrumentation these days very often.

So, during the years, new methods for doing performance analysis have been developed. One of them is based on performance monitoring interrupts (PMI) and is known as “profiling”. The easiest way to look at it is the following. If you use a debugger and will stop the program every second and record the place where you stop, you will get a collection of the samples. If you then aggregate all the samples and make a histogram, it will show you where your program spends time the most. This is the oversimplified description of what profiling tools are doing, but the idea is similar. There are automated tools like Linux “perf” and “Intel Vtune” that record thousands of interrupts (samples) per second while your program is running and then aggregate information about them.

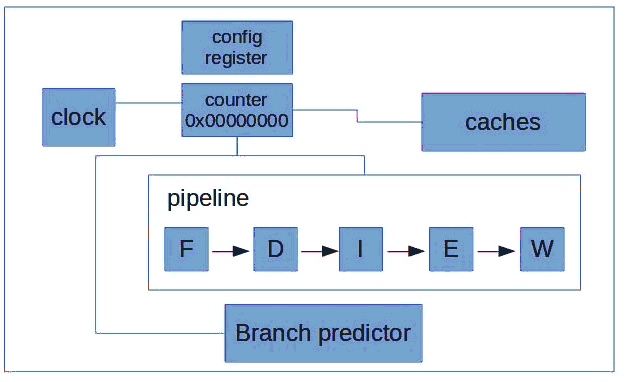

The underlying component that allows this to happen is the Performance Monitoring Counter (PMC). It allows counting different events. A simple example of using PMC can be count how much assembly instructions were executed since the beginning of the application. I.e. we can configure it in such a way that with every executed assembly instruction our HW counter will be incremented by one.

For a profiling case, PMC can be used in a little bit more sophisticated way. Let’s imagine our CPU runs at 1GHz, that’s 109 cycles per second. To interrupt the program each time after one million (106) cycles (at the frequency of 1000 samples per second) we would do the following steps:

1. set counter to -1'000'000

2. enable counting

3. wait for the overflow which will be issued by the CPU

3.1. disable counting when it happens

3.2. catch the PMI

3.3. inside the interrupt handler capture instruction pointer (IP).

4. go to step 1

Now, if we aggregate all the collected IPs together, we will know the hottest spots in our program.

For a ground-up introduction on PMUs read this blog post. For underlying mechanics of profiling with Linux “perf” tool read this article.

While profiling being the most popular use case of utilizing HW performance monitoring capabilities, it’s not the only one. If you want to know what other advanced features modern CPUs provide and how to utilize them take a look at the following articles: this, this and this.

Finally, the concept of tracing might be very helpful for performance analysis as well. If you’re familiar with Linux strace/ftrace tools this won’t be new to you. While interrupt-based monitoring by definition skips a significant number of events we are interested in, tracing captures them all. You can view it as a hybrid solution of code instrumentation and interrupt-based monitoring. Tracing technologies take the best of both worlds. It is not that expensive as instrumentation but allows to capture a lot of information about the execution of the program. Processor tracing capabilities in modern CPUs allows tracing almost every assembly instruction at a relatively low overhead. Read more about Processor Traces (PT) here.

Performance analysis methodologies

In the most straight forward case identifying hotspots of the application will be all that you need. You might see some part of the code which shouldn’t be consuming that much time actually does so. In such case you can implement high-level transformation to optimize the runtime. For example, this could be a situation when you see some redundant work is done and can be avoided in certain scenarios.

However, when all the low-hanging fruits (high-level optimizations) are implemented and you still need some improvements in order to meet the requirements, you need additional information, not just the hotspots. This is what you can consider as “tuning” (low-level optimizations). Modern CPUs have support for such tuning as well.

It’s important to understand that even with the best support CPU might provide, it can’t do miracles if the application has major performance issues. For example, if the program does sorting with BubbleSort, it’s no point to even look into advanced CPU performance metrics, we need to fix the major problem first.

Now, let’s demystify what do I mean by low-level optimizations. The compiler usually performs low-level transformations and often target a particular platform on which the code will be running on. This is not something a programmer typically do, but which can significantly improve the runtime performance of the program. Well-known examples of such transformations are:

- Function inlining

- Loop unrolling

- Vectorization

There are many existing methodologies to do performance analysis, but not so many of them are robust and formal. One can go a naive path of just profiling the app and trying to grasp through the hotspots hoping to find something there. This often leads to random experiments in which sometimes you can be lucky. So, when doing microarchitectural optimizations (another term for low-level analysis), we’ve better rely on something robust and proven.

One of such methodologies is called Top-down Microarchitecture Analysis Method (TMAM). This is an iterative process of identifying the source of the problem, finding the exact place in the code where the issue occurs and fixing it. The process is designed in a way to characterize the bottleneck of the application by putting it in one of the 4 buckets: “Retiring”, “Bad Speculation”, “Front-End Bound” and “Back-End Bound”. After that you keep drilling down inside a single bucket to find specific type of event that is limiting the application’s performance. When you finally found what type of bottleneck you are dealing with, you need to run the app again and locate places where this particular type of event is triggered. After the issue is fixed you start over the TMAM process until you get the performance you are looking for.

Analyzing multithreaded apps.

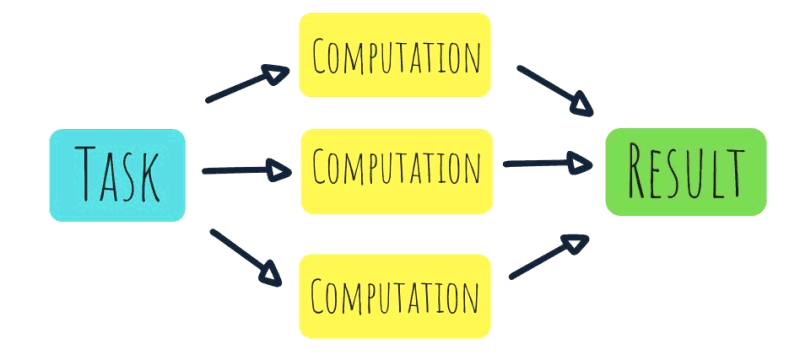

Multithreaded applications have their own specifics. Certain assumptions of single-threaded execution are invalid when we’re dealing with multiple threads. For example, we can’t no longer identify hotspots by looking at a single thread. Profiling a thread which is waiting during most of the running time won’t sched light on the reason why our multithreaded application is not scaling well.

Another example is: When dealing with the single-threaded application, optimizing one portion of the program usually yields positive results on performance. However, it’s not necessary the case for multithreaded applications. There could be one thread which does some very heavy operation, and which acts as a barrier for all the others. I.e. even though most part of the threads already finished their job, the process will not exit until there is one thread that is still running.

But the most important and complex feature of multithreaded applications is locking. Having threads communicate efficiently is essential on the way to fully utilize all of the compute power in the system. Like with functions, some locks could be accessed more frequently than the others, so it’s important to know which locks are hot and focus on those. Also, there are interesting effects like false sharing that do not occur in the single-threaded world.

If you want to know more about different aspects of how to analyze the performance of multithreaded applications, I wrote a series of articles about that topic.

Tuning examples

According to my personal experience, ~90% of all optimizations can be done on the source code of the application without touching the environment, like a compiler, OS settings, etc. If you choose to master the skill of performance tuning, you’ve better be familiar with the recipes for typical performance problems.

At the beginning of 2019, I started making challenges with the goal of practice tuning existing benchmarks. There you can find examples of possible optimization opportunities with a detailed description of how they were found. Feel free to use them as templates when optimizing your application.

I hope this was useful and I will be extremely happy if this will help developers to optimize their code.

Final words:

I wrote a book titled “Performance Analysis and Tuning on Modern CPUs”, with a goal to educate software developers to better understand their applications’ performance on modern hardware. It goes into low-level performance details including CPU microarchitecture, different methods to analyze performance, and what transformations can be done to improve performance. The PDF version is available for free, but you can also buy a paper version on Amazon.

I've prepared a valuable bonus for you!

Learn all major features of recent C++ Standards on my Reference Cards!

Check it out here: