Last Update:

constexpr Dynamic Memory Allocation, C++20

Table of Contents

constexpr has become a major feature for compile-time programming in C++. Introduced in a simple form in C++11 evolved into almost another “sub-language”, an alternative to regular template code. In C++20 you can even use std::vector and std::string in constexpr context!

In this article, I’d like to discuss constexpr memory allocations, a building block for std::vector. Let’s see how this feature works and see its limitations.

Background for constexpr Allocations

First of all, it’s essential to understand why do we want such a feature? After all, constexpr functions perform some calculations and return the results…. but how about using something more advanced like containers from the Standard Library?

In C++17 we can write the following code:

#include <array>

template <std::size_t N>

constexpr int naiveSumArray() {

std::array<int, N> arr { 0 };

for (std::size_t i = 0; i < arr.size(); ++i)

arr[i] = i+1;

int sum = 0;

for (const auto& elem : arr)

sum += elem;

return sum;

}

constexpr int smartSum(unsigned int n) {

return (n*(1+n))/2;

}

int main() {

static_assert(naiveSumArray<10>() == smartSum(10));

static_assert(naiveSumArray<11>() == smartSum(11));

static_assert(naiveSumArray<23>() == smartSum(23));

return 0;

}

Play with code @Compiler Explorer.

In the above code, thanks to constexpr std::array, we can perform computation in “regular” code, rather than template magic.

Notice that we still have to pass arguments as template parameters: naiveSumArray<10>().

Can we improve in in C++20?

C++20 and Transient Allocations

In C++20 (and also in the previous Standards), we can see that more and more types and functions are marked with constexpr. For example, most of the Standard Algorithms can now (C++20) work at compile time! But there’s still a problem with containers like vectors, string or maps.

One of the main issues that we have to solve is dynamic memory allocation, as most of the containers require it to store their elements.

The main topic to understand is transient allocation. It means that you can allocate memory in a constexpr expression, but then the mem block must be released at the end of that expression. That way, the compiler can adequately track all the allocations, and I guess it’s much easier to control and implement.

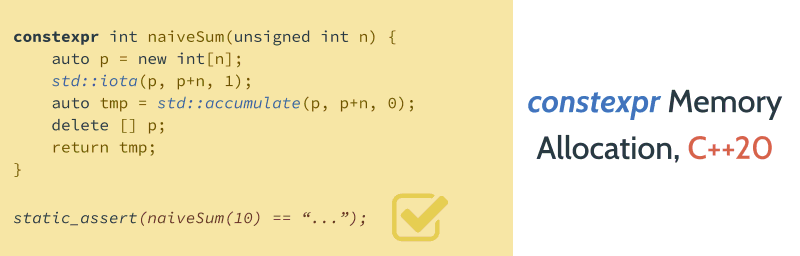

Let’s try to convert our previous example to C++20:

#include <numeric>

constexpr int naiveSum(unsigned int n) {

auto p = new int[n];

std::iota(p, p+n, 1);

auto tmp = std::accumulate(p, p+n, 0);

delete[] p;

return tmp;

}

constexpr int smartSum(unsigned int n) {

return (n*(1+n))/2;

}

int main() {

static_assert(naiveSum(10) == smartSum(10));

static_assert(naiveSum(11) == smartSum(11));

return 0;

}

Play with the code @Compiler Explorer.

The code is now the same as in the runtime version! There’s no need to pass template arguments.

As you can see, the function naiveSum uses dynamic allocation to create an array of n elements and then it calls iota to generate the values. Later the code invokes std::accumulate (marked with constexpr since C++20) to calculate the sum.

To evaluate this function at compile-time, the compiler has to track all the allocations and guarantee that they end before the function returns; there can be no undefined behaviour.

Leak Detection

We can play a little game and ask the compiler to be also a leak detector.

What if we don’t delete the mem block?

constexpr auto naiveSum(unsigned int n) {

auto p = new int[n];

std::iota(p, p+n, 1);

auto tmp = std::accumulate(p, p+n, 0);

// no delete[] p; here!

return tmp;

}

In GCC we’ll get the following error:

error: '(naiveSum(10) == smartSum(10))' is not a constant expression because allocated storage has not been deallocated

4 | auto p = new int[n];

Have a look @Compiler Explorer.

The deallocation tracking is quite smart as you can even deallocate memory in some other function (as long as it’s in the same context for a constexpr evaluation):

constexpr void delFunc(int* p)

delete [] p;

}

constexpr int naiveSum(unsigned int n) {

auto p = new int[n];

std::iota(p, p+n, 1);

auto tmp = std::accumulate(p, p+n, 0);

delFunc(p);

return tmp;

}

See @Compiler Explorer.

It can also track when you try to deallocate with delete instead of delete[]:

constexpr auto naiveSum(unsigned int n) {

auto p = new int[n];

std::iota(p, p+n, 1);

auto tmp = std::accumulate(p, p+n, 0);

delete p; // oops!

return tmp;

}

Then we’ll get the following error:

error: non-array deallocation of object allocated with array allocation

See @Compiler Explorer.

Implementing a constexpr Buffer class

As another example, we can try implementing a simple buffer (almost a vector!) class:

template <typename T>

class Buffer {

public:

constexpr Buffer(size_t n) noexcept : size_(n), mem_(new T[n]) { }

constexpr ~Buffer() noexcept { delete [] mem_; }

constexpr Buffer(const Buffer& other) noexcept : size_(other.size_) {

// ...

}

constexpr Buffer(Buffer&& other) noexcept {

// ...

}

constexpr Buffer& operator=(const Buffer& other) noexcept {

// ...

}

constexpr Buffer& operator=(Buffer&& other) noexcept {

// ...

}

constexpr T& operator[](size_t id) noexcept { return mem_[id]; }

constexpr const T& operator[](size_t id) const noexcept{ return mem_[id]; }

constexpr T* data() const noexcept { return mem_; }

constexpr size_t size() const noexcept { return size_; }

private:

T *mem_ { nullptr };

size_t size_ { 0 };

};

And use it:

constexpr int naiveSumBuffer(unsigned int n) {

Buffer<int> buf(n); // almost a vector class!

std::iota(buf.data(), buf.data()+n, 1);

return std::accumulate(buf.data(), buf.data()+n, 0);

}

Play with code @Compiler Explorer.

See also an excellent use of std::iota and std::accumulate - constexpr algorithms from the Standard Library!

More Details

Let’s now see some details from the proposal P0784R7:

During an evaluation of a constant expression, a call to an allocation function is always omitted. [ Note: Only new-expressions that would otherwise result in a call to a replaceable global allocation function can be evaluated in constant expressions (see [expr.const]). — end note ]

What can be called:

- a new-expression (7.6.2.4), unless the selected allocation function is a replaceable global allocation function (16.6.2.1, 16.6.2.2) and the allocated storage is deallocated within the evaluation of e;

- a delete-expression (7.6.2.5) unless it deallocates a region of storage allocated within the evaluation of e;

- a call to an instance of

std::allocator<T>::allocate(allocator.members), unless the allocated storage is deallocated within the evaluation of e;- a call to an instance of

std::allocator<T>::deallocate(allocator.members), unless it deallocates a region of storage allocated within the evaluation of e;

It seems that we have a limited set of allocation techniques that we can use: it’s mainly new and std::allocator::allocate.

Limitations

From the above examples and investigation, we can learn that the main issue with constexpr new is that the memory allocation cannot “go outside” the constant expression… and thus you cannot use it, for example, for creating lookup tables.

One trick would be to somehow copy the result into std::array:

template <size_t N, typename T>

constexpr auto prepareLookup() {

Buffer<T> buf(N) = CommonCodeForRuntime(N);

std::array<T, N> out;

std::copy(buf.data(), buf.data()+N, out.begin());

return out;

}

Notice CommonCodeForRuntime can be a regular constexpr function that can also be shared in runtime context.

Thanks @botros__fadi for discussions on lookup tables over the weekend :)

Summary

The initial version of this article was published months ago for Patrons! If you want to get early previews, exclusive material, free ebooks, join C++ Stories @ Patreon.

In this article, we discussed constexpr dynamic memory allocation. This is a new feature in C++20 and allows to have not only compile-time containers - like arrays but also use variable-length containers. And this functionality is essential for other features std::vector and std::string.

The main limitation is that the memory has to be deallocated in the same context.

You can read about the reasoning and more details in the following paper: P0784R7.

And as always, we’re backed up by a C++ Weekly episode on the same topic: Episode 188

As of March 2021, this feature works in all major compilers:

| GCC | Clang | Visual Studio |

|---|---|---|

| 10.0 | 10.0 | Visual Studio 16.9 |

I've prepared a valuable bonus for you!

Learn all major features of recent C++ Standards on my Reference Cards!

Check it out here: